What My Students Had To Say About AI

Students shared how they felt about AI usage—for students and adults alike

Sometimes I feel like I’m living a double life as a teacher.

On one hand, there is this very fascinating but also very frustrating AI conversation happening, one I’ve tried my best to engage with since the release of ChatGPT. I have lots of feelings, and amidst my questions and reservations, I believe with deep conviction that the best path forward is more conversation amongst educators and a commitment to remaining humble and open-minded. If this is going to be a paradigm shift in education, we need as many classroom voices and perspectives as possible to be a part of that conversation.

On the other hand, though, in my actual classroom? I haven’t really felt the weight of this potential paradigm shift all that much.

You see, we have sort of just been “doing our thing” this year: reading cool stuff and taping it into our spiral notebooks to annotate; jotting down quick-writes and reflections by hand; hopping onto Chromebooks only when we need to for exit tickets on Canvas or Google Forms; and, inevitably, completing longer writings on Google Docs I create and share with them—with most of the writing (if not all!) taking place within our classroom walls.

Also: students talk with each other. A lot.

I have zero doubt that occasional AI usage is taking place (maybe even more than occasional!), but I don’t worry too much about it at this point in the school year, when I feel like I’ve done the work to understand my students’ writing and they’ve done the work to believe in that writing enough to trust it across the finish line.

With a month left, here we are. (As always, it’s a good place to be.)

However, there’s also that pedagogical curiosity of mine, one that humbly recognizes that at some point we may not be able to keep treading water within this eye of the hurricane that is AI’s increasing impact on education and pretty much everything else. So I finally decided to ask my students what they think, especially about the idea of teachers using AI.

Oh boy, did they have some things to say.

🗣️ “they will most likely forget the material they teach”

After finishing our research unit and submitting their projects last week, my sophomore English 10 classes had a solid chunk of a class period left to end the unit—leaving us with just enough time to have a classroom conversation about AI’s potential role in the classroom moving forward.

The first part of this discussion had students on their feet as they traveled around the room in small groups to discuss and then “take a stance” on various questions around AI usage in education.

Among those that they explored:

Do schools need to integrate AI tools and learning strategies into classrooms?

Should teachers be using AI tools for lesson preparation and/or feedback?

Is it okay to have different standards and expectations around AI usage for teachers versus students?

My only framing going into this activity was to explain to them that a) this is increasingly a discussion taking place amongst many adults in education right now; b) there is very little consensus across educators, with lots of folks doing lots of different things; and c) student perspective often gets left out of these discussions—which was why I wanted to shut up and just listen to what they had to say.

Then the discussion amongst students commenced.

Pretty much a consensus across the three sophomore classes I facilitated this discussion with? A heavy amount of skepticism towards AI, particularly around its use by teachers, something many students seemed completely taken aback by.

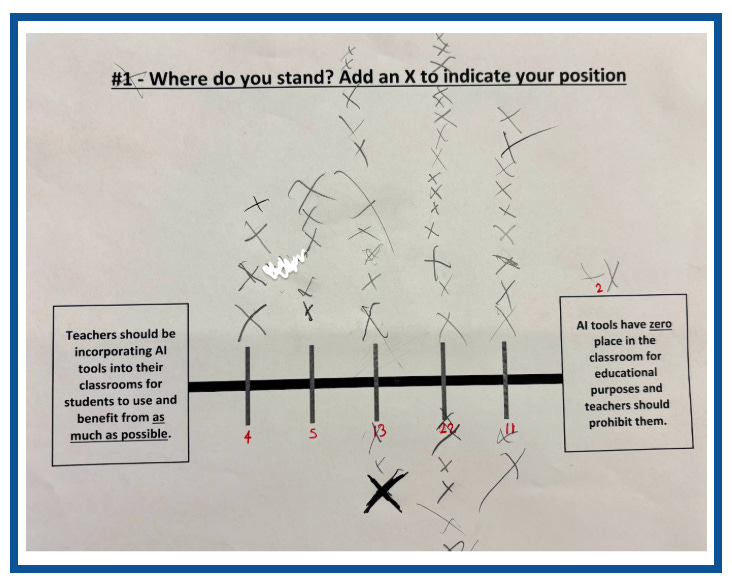

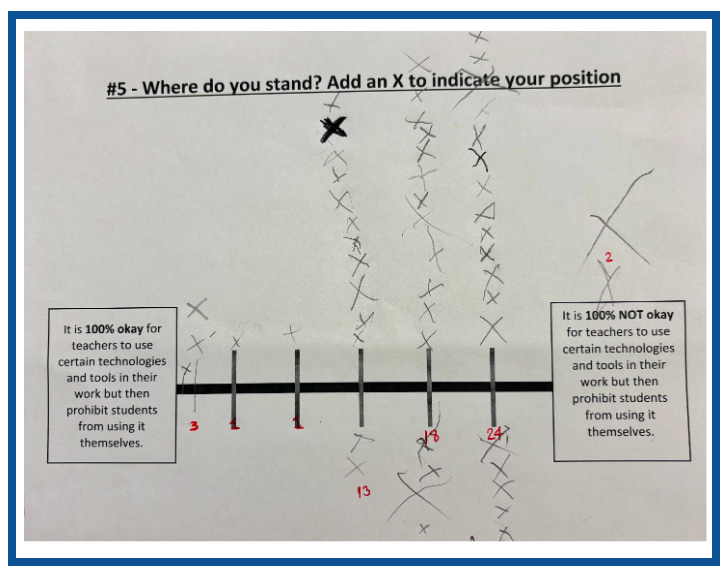

Here is the collected data from the gallery walk activity we started with:

61% of students strongly or somewhat agree that “AI tools have zero place in the classroom” (only 16% somewhat or strongly disagree with this statement)

66% of students strongly or somewhat agree that teachers “should not be using AI tools at all to prepare lessons or give feedback” (only 10% somewhat or strongly disagree)

71% of students are strongly or somewhat against “teachers using AI tools but prohibiting students from doing the same” (only 8% are somewhat or strongly in favor)

So, yeah, initial takeaway for teachers and educators out there enthusiastically incorporating AI into their practices and classrooms—and advocating for others to do the same?

Maybe we need to pause and talk with our students a bit more first.

They have a lot to say.

🗣️ “they want to have their cake and eat it too”

While I know that New York Magazine article on students “cheating their way through college” was much discussed last week, I still find myself circling back to the New York Times article that had everyone’s attention the week prior: “Teachers Worry About Students Using A.I. But They Love It for Themselves.”

For me, this is what has been missing in these AI education conversations: a focus on how adults in education use AI, rather than only on how students use it and the inevitable “students these days” sentiments that follow.

How educators are navigating this moment with AI is, I believe, very much part of the conversation. Perhaps even the center of it.

So I went ahead and shared that New York Times article with my students to see what they thought about it.

Following the gallery walk discussion, students spent the next part of class silently reading the article. They then hopped into an online discussion post to share their “takes” with each other before silently reading and responding to the replies of classmates.

Some noteworthy things that were said in this online discussion:

“I get how that might save [the teacher] time but it never means it’s right.”

“…if a student uses A.I it is considered cheating, so shouldn't it be the same for teachers?”

“…if this continues we're gonna end up like the humans in Wall-E.”

“It seems as though they want to have their cake and eat it too”

“it's just another way that teachers are being hypocrites”

Not exactly building a culture of trust with this, are we?

I found that the bulk of the online discussion amongst students paralleled what was expressed in the gallery walk activity: students were largely against the use of AI in general, by students and teachers alike, and outspokenly against (a) the use of AI for feedback and (b) inconsistent expectations for AI use by adults versus students.

Some exceptions to this? Students were open to the idea of having opportunities to learn how to use AI successfully going forward (48% in favor versus 32% against) and were mostly in favor of ideas such as increasing awareness of AI as a tool without integrating it into all classrooms.

(Oh, and there was also one student who speculated quite confidently about the potential demise of the teaching profession as a result of AI: “teachers will be replaced with AI because it is cheaper and more efficient.”)

🗣️ “… it also will separate you from your students”

Out of all the things I heard and read throughout these discussions, one specific comment left my jaw agape as I read back through the results at the end of the day: “you still have to do your job, and it also will separate you from your students.”

It also will separate you from your students.

In my mind, we are already in a crisis of disengagement in education, with students feeling less connected and purposeful the longer they are in our schools.

Is our response to this very real problem really going to be doubling down on AI as a solution? To throw money at AI tools, encourage AI shortcuts for the sake of efficiency, and normalize AI-generated feedback, all while continuing to gaslight those of us who remain skeptical about the capacity of education to ethically integrate a tool that was created through transparently unethical means?

Shouldn’t we instead think twice before choosing a path that could very well push our already-disconnected students even further away?

🗣️ “… defeats the purpose of a class.”

After seeing the near-consensus in how my sophomores reacted last week, I added an extra question the other day to the “teacher choice evaluation” activity we were already doing in my AP Literature class: was I making the right choice as a teacher not to bring AI into our classroom, both in my own practices and in terms of student usage?

On a 1–7 scale, 73% of my juniors chose 7/7 (“Great choice!!!”), with only 7% expressing interest in what AI could offer the classroom.

“Using AI feels lazy and like a shortcut, which defeats the purpose of a class,” one student wrote in their reasoning. “We believe the students and teachers should work hard.”

In the final class of the day, when asked to reveal which number they had arrived at on their own, 100% of students raised their hands for 7/7, sharing their perspective that AI does not belong in the classroom, for students and teachers alike.

Again: 100%.

For students and teachers alike.

🗣️ “…just another way that teachers are being hypocrites”

Given that the feedback on this topic was so overwhelming across all my classes, I guess the positive thing is that it gives me even more clarity that our classroom’s AI-resistant path continues to be the right one, now and going forward into next year.

Looking out at the broader discourse, though, and the current trajectory of where we’re heading?

Considering the lack of engagement and trust students feel within our education system and how this could very well be pouring gasoline on that metaphorical fire?

I am very worried about the direction adults are choosing to take education with AI and how it will make the work of creating and sustaining a purposeful, engaging classroom that much harder.

Our students deserve better. (And they know it.)

Do we?

Some good stuff I’ve read recently on this topic

Even with all this student feedback in my own classroom showing resistance to AI, I still believe the best possible thing I can do as an educator is to stay engaged in the conversations around it, so here are five quite different recent pieces on AI in education that have resonated with me and that I wanted to share before signing off:

“Catch them Learning: A Pathway to Academic Integrity in the Age of AI” by Tony Frontier: writing for Cult of Pedagogy, Frontier lays out one of the best framings I’ve encountered about how to consider and talk about AI as a teacher—and I’ve already pinned this myself to return to as I reflect on how to improve in this area in the years ahead.

“How I'm Teaching and Learning in an AI Augmented World” by : her newsletter (which you can sign up for at her website) is a must-read, in my opinion, as she is both generous and thoughtful in sharing her own explorations as a teacher and educator: “I’m still learning, but I’m certain that in this context too, navigating AI requires my engagement—not my withdrawal.”

“Addressing the Transactional Model of School” by : as always, Warner continues to be a must-read on all things writing and education, including this AI conversation. “While generative AI use has implications for academic integrity, its implications reach far beyond that relatively narrow frame,” Warner writes. “The bigger issue is what happens if we have successive years of graduates who have not had a genuinely meaningful educational experience.”

“More on AI and Academic Integrity” by : I read this piece and immediately wished I could click a button and have ELA teachers across the country read and discuss it, because there is so much here, particularly ideas that situate AI within the larger structures and discourse of education and very real, authentic moves to make as a teacher within this AI moment (moves that, I should note, were also very meaningful before AI arrived in our classrooms).

“English Teacher Weekly for May 16th” by : in yesterday’s edition of Campbell’s phenomenal weekly newsletter, which curates some of the best stuff out there for educators to consider (especially ELA folks!), he juxtaposed the importance of motive in student writing with a tentatively more hopeful outlook from a New Yorker article by Graham Burnett: “The A.I. is huge. A tsunami. But it’s not me. It can’t touch my me-ness. It doesn’t know what it is to be human, to be me.”

This was a fantastic read, and I applaud you for having this conversation with your students. The thing that boils it all down for me was this: "Maybe we need to pause and talk with our students a bit more first. They have a lot to say."

I find that faculty are often dismissive of asking for student input and hearing what they have to say on the matter (for the record, I teach English at a CC). However, when students have buy-in, that's going to lead to better engagement and better learning. This all starts with having conversations such as these.

Marcus, I appreciate your commitment to surfacing student perspectives on AI and your transparency about your own uncertainties as an educator.

As a critical friend, I’d like to gently highlight a few biases that shape your analysis to deepen the conversation and to help all of us reflect on how we interpret student voice.

Teacher-Centric Framing:

Throughout your piece, student comments are introduced and contextualized through your own lens as a teacher, not so much as a learner. For example, when you share students’ excitement about AI making work “easier,” you quickly pivot to concerns about authentic learning and academic integrity. This frames their curiosity as something to be managed rather than explored on its own terms. Offloading can be destructive, but it can also be useful, for example. It might be helpful to let some student voices stand on their own, even (or especially) when they challenge our professional instincts.

Selective Amplification:

You highlight student worries about AI undermining “real understanding” and “making school too easy,” which dovetails with your skepticism about technological shortcuts. Have you spoken about this with them before? Do they know your strong feelings? If any students expressed unreserved enthusiasm for AI’s creative or accessibility potential, those voices seem less foregrounded. This selective amplification can unintentionally reinforce your own cautious stance, rather than presenting a full spectrum of student thought.

Interpretation Through Adult Values:

When you summarize student responses, you often interpret them in light of educational values like “struggle,” “authenticity,” and “process.” These are core values, don’t get me wrong, but they’re also adult-centric constructs. For instance, a student’s desire to use AI to save time might reflect real-world efficiency skills valued outside school. It might even make sense for certain school assignments. This week, for a piece of research I’m doing, I interviewed a high school junior who uses ChatGPT every day, e.g., to help her with the verb system in a foreign language class. This is something we might miss if we only see it as a threat to rigor. We can’t know the reality without a free zone to speak.

Your analysis, while thoughtful and heartfelt, is shaped by a bias toward traditional educational priorities and a protective stance toward your own professional identity. This isn’t a flaw. Every educator brings their values to the table, but it does mean that student voices are filtered, not raw. I encourage you to consider what it would look like to center student perspectives even more, perhaps by inviting students to co-author or respond directly to your reflections, especially those who disagree.

Thanks for opening up this important conversation!